The localization subsystem has two high-level tasks: to localize the follower relative to the leader and localize itself relative to the odom frame/start point.

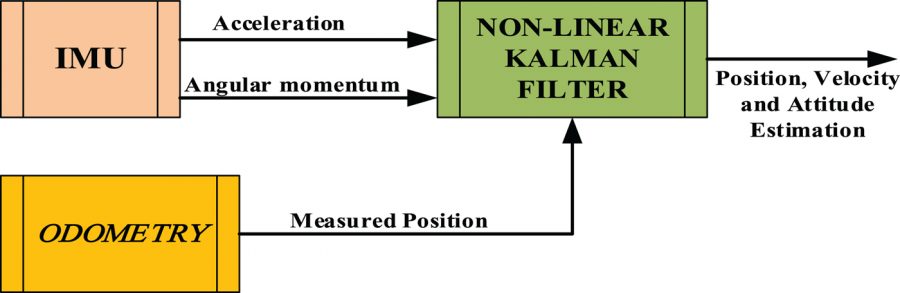

As we don’t have gps as well as no map of the environment, self-localization and odometry based dead-reckoning estimation are a backbone for our path following algorithm. We intend to use A well-established relative localization technique called dead-reckoning uses kinematic models on odometric data to compute the current position of a robot in reference to its starting position. Because of the limited precision of wheel encoders, and deviations from the intended route not observable by the sensors such as wheel slippage, error compounds with dead-reckoning, causing the accuracy of the pose information to degrade with time, especially because we working on ice. Therefore we implement a sensor fusion of IMU + Wheel Odometry to get better results. The result of combining data derived from different sensors can have the effect of reducing ambiguity and uncertainty, extending temporal and spatial coverage, and improving the resolution of robotic systems.

The odometry system provides a locally accurate estimate of a robot’s pose and velocity based on its motion. The odometry information can be obtained from various sources such as IMU, LIDAR, RADAR, VIO, and wheel encoders. One thing to note is that IMUs drift over time while wheel encoders drift over distance traveled, thus they are often used together to counter each other’s negative characteristics.

The odom frame and the transformation associated with it use a robot’s odometry system to publish localization information that is continuous but becomes less accurate over time or distance (depending on the sensor modalities and drift). To obtain consistently accurate odometry information over time, the map frame provides globally accurate information that is used to correct the odom frame.

We then use cascaded extended Kalman Filter to fuse the sensor data. These two sets of global coordinates are weighted against each other by an Extended Kalman Filter (EKF). The EKF estimates the current state of the system by forming and validating hypotheses on the system state with each round of new data. The Kalman filter ideally weighs the linear movement data from the wheel odometry and the rotational movement from the IMU strongly, while weighing the odometry rotation data and the IMU acceleration data weakly. In doing so, the design avoids some of the pitfalls of pure odometry or inertial systems, while providing better accuracy at the expense of the complexity of sensor fusion. The Kalman filter output coordinates are continuously transmitted to a base station, which receives the position and heading, and plots it with respect to the initial starting position.

For the ATV platform, we used the GPS for localization instead of the method descriped above. That was because the ATV we used was an outdoor vehicle with GPS Installed.

For the Zamboni ice resurfacer platform, We used the IMU data from Xsens MTi IMU and the wheel motor rpm as inputs for the localization subsystem.